Data Virtualization with Wiweeki

What is Data Virtualization?

Venkatesh Kalluru, CTO of Wiweeki, defines data virtualization by saying, “If you take the word virtualization, what does virtual data mean? Virtual data means the data is not within your reach, where you can touch and feel it. The same is the case when it comes to data virtualization. Data is not actually residing on your computer, data is sitting in different places, but it makes it look as if it was within your reach.” He continues his definition by adding that “Data virtualization is nothing but providing access to remote data in remote environments.”

Source: What is Data Virtualization?

David Winslow, Sr. Data Scientist at Wiweeki, defines data virtualization as “making the data more presentable to a consumer or end-user.” Data virtualization hides the underlying complexity and presents the data to the end user in a usable form, so they can create reports or run analyses.

Challenges Data Virtualization Aims to Solve

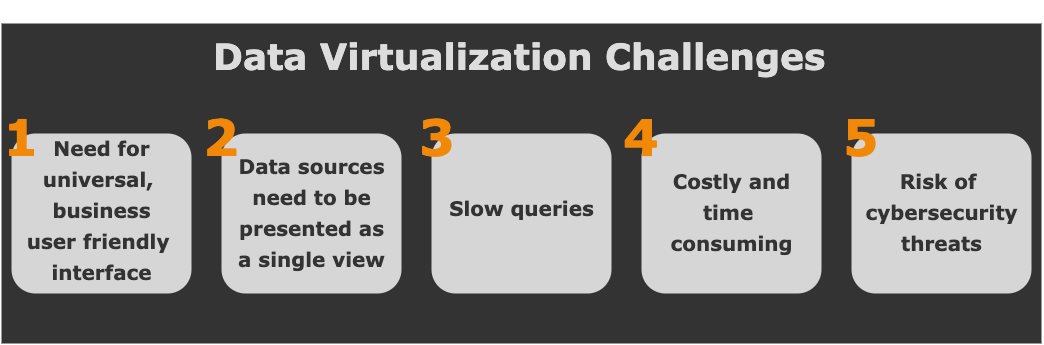

Data virtualization became necessary because of the many challenges companies faced without it. Those challenges included:

- The need for a business user-friendly interface that presents Business Intelligence in a readable, simplified format.

- Data sources need to be presented as a single view to allow for reusability and ensure the most up-to-date data.

- Queries run slowly.

- Moving and maintaining data from multiple data sources is costly and time-consuming.

- Sensitive data is at risk of cybersecurity threats.

Challenge Accepted!

Loxio – A Self Service Data Lake and Analytics Platform

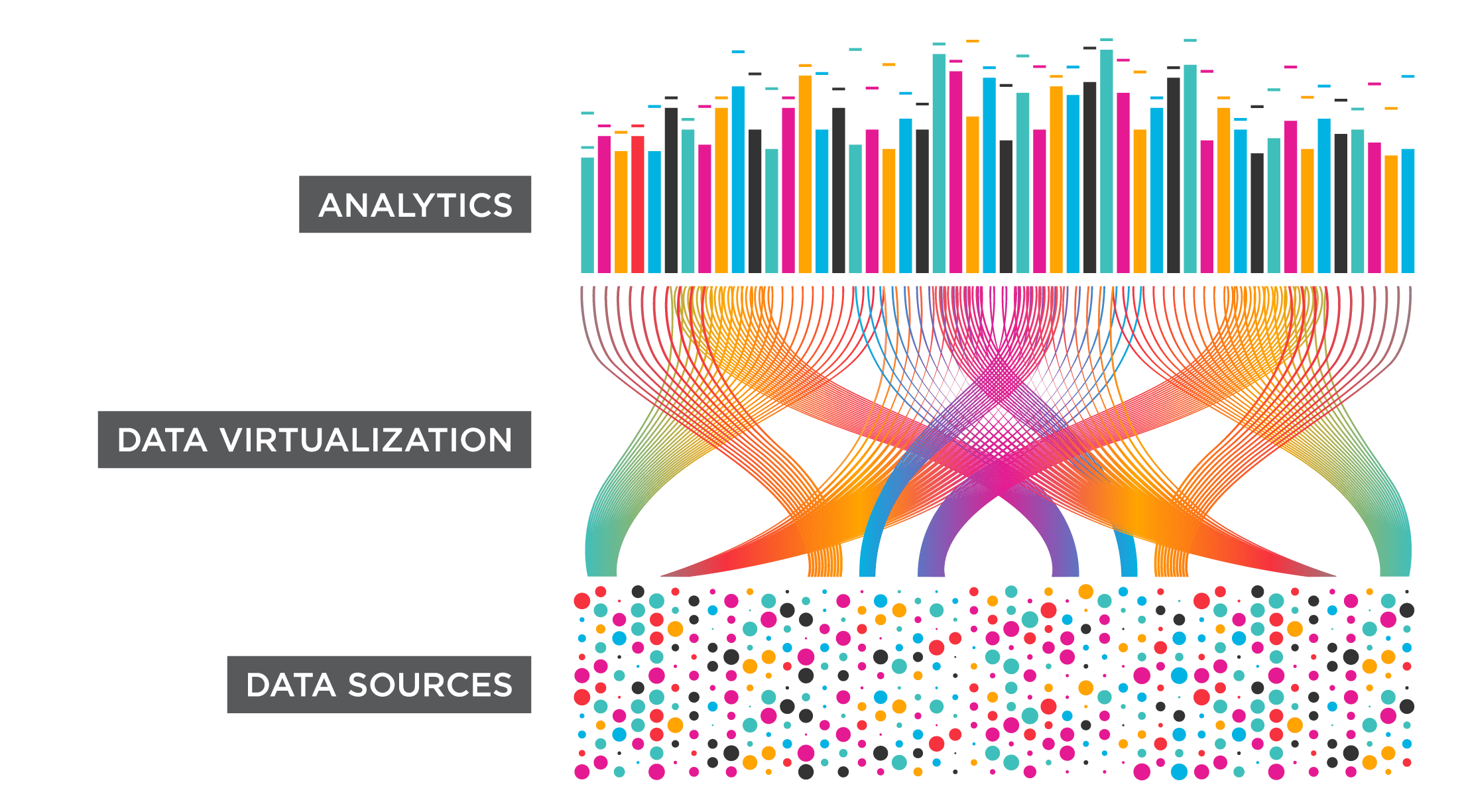

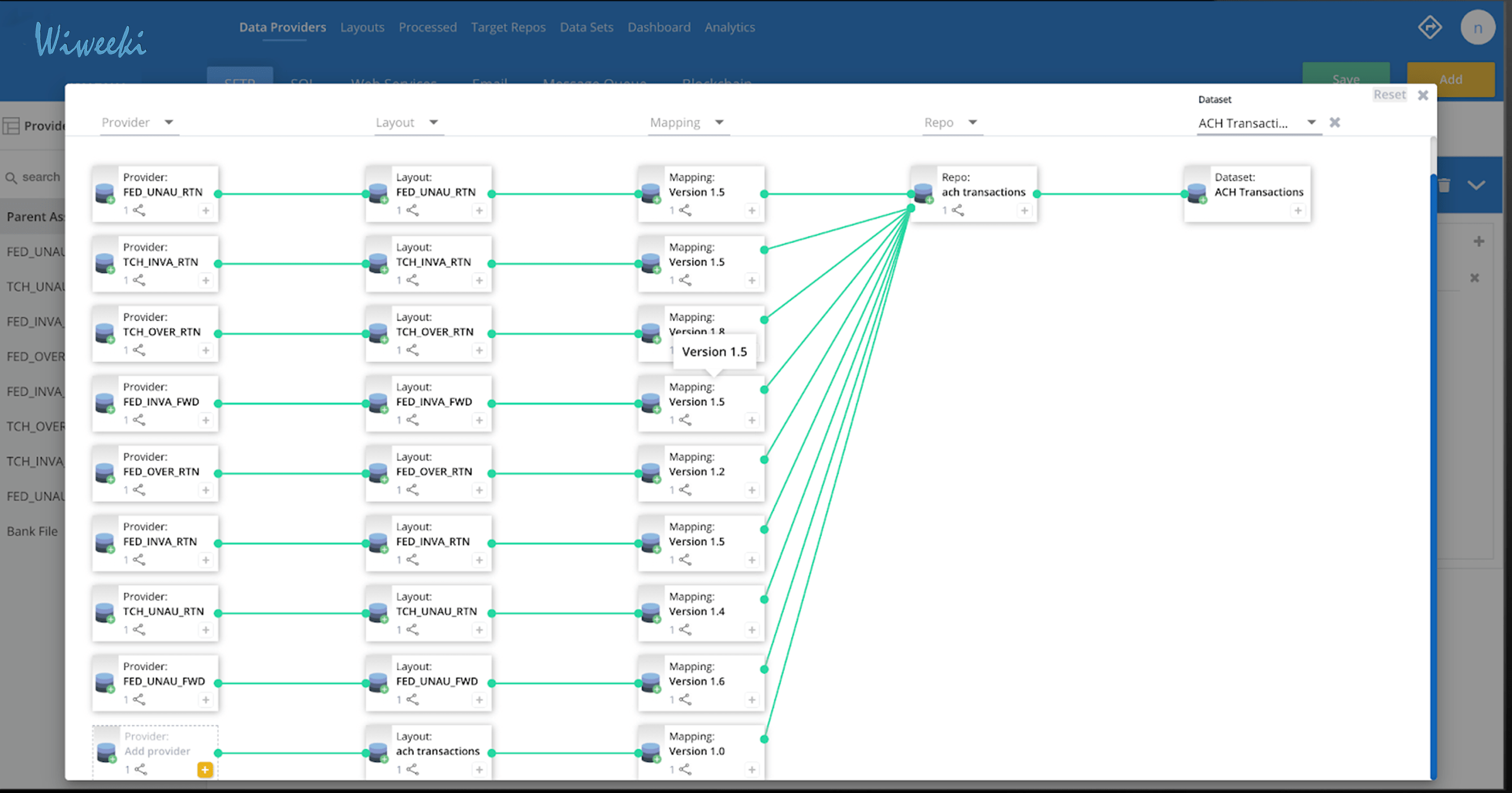

So far, we have discussed what data virtualization is and the challenges it aims to solve, but how does it solve those challenges? The experts at Wiweeki have mastered the data virtualization process, and they have created software that does just that. The product is known as Loxio, a business-user-friendly, self-service data lake and analytics platform. Loxio uses data virtualization to bring disparate data into a single source, either physically or virtually, that business users can access as a single view. Loxio can bring in both structured and unstructured data and present it to the end user in a structured way. Loxio is capable of bringing in data in two different ways:

1. Extracting, transforming, and loading the data from external sources into a local data lake

2. Querying the data in real time as the end user needs it
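The difference between these two modes can be illustrated with a minimal Python sketch. This is not Loxio's implementation: the in-memory SQLite databases standing in for an external source and a local data lake, the `sales` table, and the helper names `make_source`, `etl_to_lake`, and `query_live` are all assumptions made for illustration.

```python
import sqlite3

def make_source():
    """Hypothetical external source, modeled as an in-memory SQLite database."""
    src = sqlite3.connect(":memory:")
    src.execute("CREATE TABLE sales (region TEXT, amount REAL)")
    src.executemany("INSERT INTO sales VALUES (?, ?)",
                    [("east", 100.0), ("west", 250.0)])
    src.commit()
    return src

def etl_to_lake(src, lake):
    """Mode 1: extract rows, transform them, and load a copy into the lake."""
    rows = src.execute("SELECT region, amount FROM sales").fetchall()
    transformed = [(region.upper(), amount) for region, amount in rows]
    lake.execute("CREATE TABLE IF NOT EXISTS sales (region TEXT, amount REAL)")
    lake.execute("DELETE FROM sales")
    lake.executemany("INSERT INTO sales VALUES (?, ?)", transformed)
    lake.commit()

def query_live(src):
    """Mode 2: query the source at request time; nothing is copied."""
    return src.execute(
        "SELECT UPPER(region), amount FROM sales ORDER BY region"
    ).fetchall()

source = make_source()
lake = sqlite3.connect(":memory:")
etl_to_lake(source, lake)

# The source changes after the ETL run has finished.
source.execute("INSERT INTO sales VALUES ('north', 75.0)")
source.commit()

lake_rows = lake.execute(
    "SELECT region, amount FROM sales ORDER BY region"
).fetchall()
live_rows = query_live(source)
print(lake_rows)  # the copy in the lake, as of the last ETL run
print(live_rows)  # the current state of the source
```

In this sketch the lake copy only reflects the source as of the last load, while the live query sees the new row immediately. The trade-off is that every live query depends on the source being reachable at request time.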

Once the data has been ingested and prepared, it is ready to be made available to the user. This is where the data virtualization piece of the puzzle comes in. “This is where we decide who gets access to what and how. So we are now creating different filters and giving access based on this,” says Mr. Kalluru. “We are bringing data from different data sources, augmenting that and providing that in the form of datasets, which is our word for data virtualization in Loxio.”
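One way to picture "who gets access to what and how" is a dataset that carries a row filter and a column list per role. The sketch below is a hypothetical model, not Loxio's actual dataset API: the `Dataset` class, its `grant` and `read` methods, and the role names are all invented for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class Dataset:
    """Hypothetical dataset: a named view over rows plus per-role access rules."""
    name: str
    rows: list
    # Maps a role name to (row predicate, list of visible columns).
    policies: dict = field(default_factory=dict)

    def grant(self, role, predicate, columns):
        """Give a role access to the rows matching predicate, limited to columns."""
        self.policies[role] = (predicate, columns)

    def read(self, role):
        """Return the filtered view for a role, or refuse if no rule exists."""
        if role not in self.policies:
            raise PermissionError(f"{role!r} has no access to {self.name!r}")
        predicate, columns = self.policies[role]
        return [{c: row[c] for c in columns} for row in self.rows if predicate(row)]

sales = Dataset("sales", [
    {"region": "east", "rep": "Ana", "amount": 100.0},
    {"region": "west", "rep": "Raj", "amount": 250.0},
])
sales.grant("east_manager", lambda r: r["region"] == "east", ["rep", "amount"])
sales.grant("finance", lambda r: True, ["region", "amount"])

east_view = sales.read("east_manager")   # only east rows, without the region column
finance_view = sales.read("finance")     # all rows, without the rep column
```

The underlying rows are stored once; each role simply sees a different filtered view of them, and a role with no rule is refused outright.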

After the data reaches the target repositories, Loxio can bring all of that data together in a single dashboard. Loxio allows users to create visualizations with data from multiple sources. For example, if a user needs to create a report with data from a “Sales” Excel file and weather reports, they can easily accomplish this task with all of the data right at their fingertips.

One of the main goals of Loxio was to eliminate the need for ETL tools and developers, putting the power in the hands of the business user who will ultimately be making the important business decisions. Loxio’s UI/UX is designed so that anyone can use it, regardless of technical skill. There is no coding involved, just simple drag-and-drop functionality that puts data exploration at the fingertips of the business user.

Federal Procurement Data System

Data virtualization has been a part of the technology world for quite a while now, and so have these guys. “We’ve been swimming in the data world for a long time,” says Mr. Kalluru: 20+ years, to be more precise. In fact, 20 years ago, both Mr. Kalluru and Mr. Winslow were involved in the development of the federal procurement management systems USAspending and FPDS-NG. “We provided data virtualization by giving the data dumps to people who are looking to get some data through multiple ways. We gave extracts of data, we gave them reports, we gave them a window into looking at an individual transaction. Then, we gave them access to search our database, not download anything, but just to search and read on our system itself,” says Mr. Kalluru.

Lockton’s RxMart 2.0

Their data virtualization journey has not stopped there. Wiweeki has recently worked on Lockton’s RxMart 2.0. One of the challenges was significantly improving their reporting and analytics process to make more informed decisions regarding prescription insurance plans. They have a data store of all the prescriptions that were ever filled for their clients. The prescription information includes the drug, pharmacy, patient, prescriber, and cost information. “You can’t do a whole lot of analysis on that so what we did was we virtualized the data,” says Mr. Winslow.

“We combined that data with geography data based on the pharmacy, detailed drug data from Medispan, and detailed pharmacy data from NCPDP. We took those disparate data sources and combined them into a single dataset and they can do a lot more analysis on prescription drugs. Because their whole job is to figure out how to get their clients a better prescription insurance plan, they need to know what the client is buying, how sick they are, and how much they’re spending to negotiate better plans for them. The prescription data itself was just simply not enough data. So that’s where we did a whole lot of the data virtualization, to get them a fuller dataset that made their analysis easier.”
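The idea of combining disparate sources into a single dataset can be sketched with a SQL view. The example below is only an illustration under stated assumptions: the table and column names are hypothetical stand-ins, not the real Medispan, NCPDP, or RxMart schemas, and an in-memory SQLite database replaces the actual data stores.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.executescript("""
-- Hypothetical stand-ins for the three kinds of source data.
CREATE TABLE prescriptions (rx_id INTEGER, drug_id TEXT, pharmacy_id TEXT, cost REAL);
CREATE TABLE drugs (drug_id TEXT, drug_class TEXT);
CREATE TABLE pharmacies (pharmacy_id TEXT, state TEXT);

INSERT INTO prescriptions VALUES
    (1, 'D1', 'P1', 20.0), (2, 'D2', 'P1', 300.0), (3, 'D1', 'P2', 25.0);
INSERT INTO drugs VALUES ('D1', 'statin'), ('D2', 'biologic');
INSERT INTO pharmacies VALUES ('P1', 'MO'), ('P2', 'KS');

-- The single combined dataset: a view, so no rows are copied.
CREATE VIEW rx_enriched AS
SELECT p.rx_id, d.drug_class, ph.state, p.cost
FROM prescriptions p
JOIN drugs d ON d.drug_id = p.drug_id
JOIN pharmacies ph ON ph.pharmacy_id = p.pharmacy_id;
""")

# Analysts query the enriched dataset without knowing about the joins behind it.
spend_by_class = db.execute(
    "SELECT drug_class, SUM(cost) FROM rx_enriched "
    "GROUP BY drug_class ORDER BY drug_class"
).fetchall()
print(spend_by_class)
```

A question such as "how much is spent per drug class?" cannot be answered from the prescription table alone in this sketch, because the class lives in a different source; the view makes it a one-line query.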

In the end, Mr. Winslow could have gone on and on about all of the projects he has worked on using data virtualization, but to sum up his experience, he says, “I don’t know when it’s not needed. I’ve never been in a project where we didn’t virtualize data. Every time there’s data from disparate data sources, you have to combine them to somehow make them useful.”

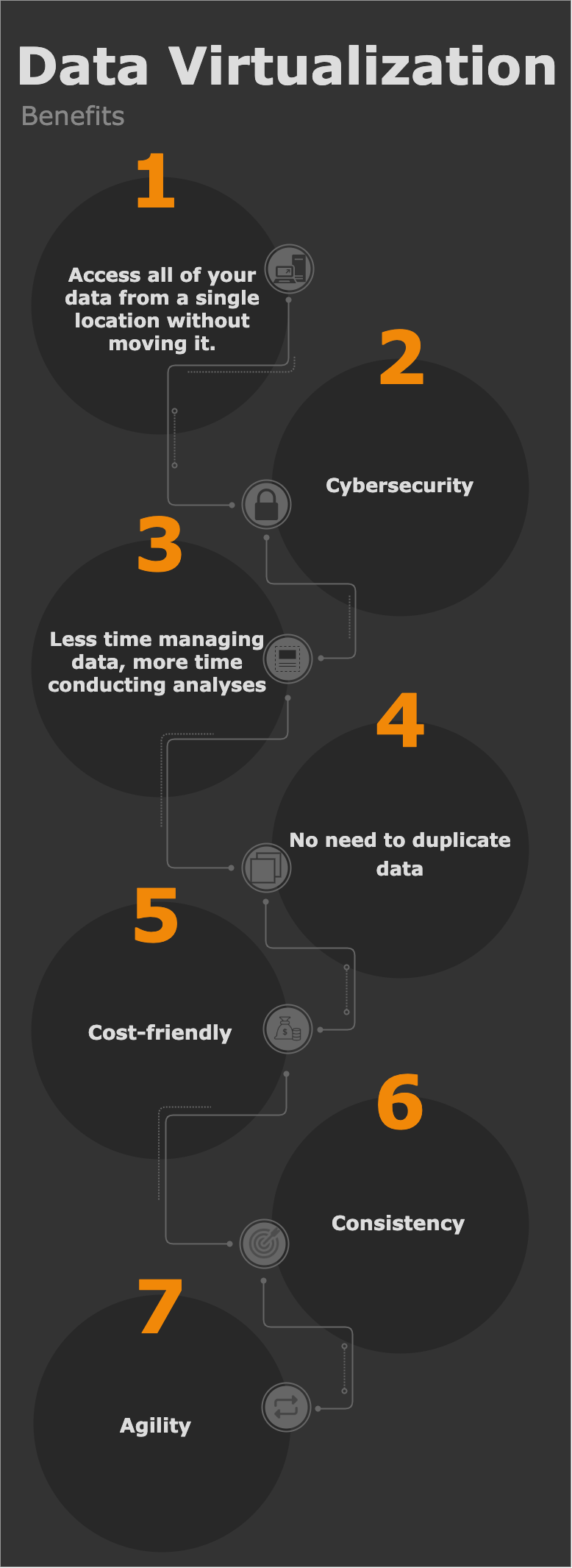

Benefits of Data Virtualization

Data virtualization technologies offer business users a wide range of benefits:

1. The ability to access all of your data from a single location without having to move the data by building complex ETL pipelines.

The process of physically moving data can disrupt the downstream systems. Data virtualization allows you to lift and shift to new data platforms without downstream users ever knowing.

2. Cybersecurity

Physically moving data from one source to another can expose your sensitive information and IP address to cybersecurity threats. Data virtualization significantly reduces these threats because the data stays where it is.

3. Companies spend significantly less time managing data, and a lot more time conducting analyses to answer important business questions and make smart business decisions.

When companies are continuously moving their data, more time gets spent preparing the data to make it usable than conducting analyses. According to research conducted by AtScale, another leader in data virtualization technologies, “On average, companies spend 42% of their time on data profiling and preparation, another 16% of their time on data quality monitoring, and only 12% of the time on analysis.”

4. “There’s no need to duplicate data in your system.

Once you duplicate the system, you will get into these problems of reconciliation and ‘is my data up to date?’ The data provider is the one who is the authoritative source. If you make a clone of the data, and the data provider has changed the data, then you have to get into this constant business of reconciliation with the authoritative source. So, data virtualization gives you the ability of a virtual view into the data, you are always looking at the authoritative copy of the database. So, data maintenance and reconciliation are no longer needed,” according to Mr. Kalluru.

5. Cost friendliness

Because business users can run multiple environments on one piece of infrastructure, less physical infrastructure is needed. Business users don’t have to maintain as many servers or use as much electricity, which in turn reduces that dreaded electricity bill.

6. Data virtualization provides businesses with a universal interface so that all the different business teams can generate consistent answers to the same questions.

Consistency is crucial for delivering the most accurate, up-to-date data that satisfies the business’s needs. Before data virtualization, the data gathering process involved many steps, with many different people and teams managing that data. This often resulted in inconsistent reports that did not meet the requirements of the business, forcing the team to repeat the process all over again. That is, until data virtualization came along.

7. Data virtualization can provide agility to business processes by allowing reports to be based around a centralized logic, allowing multiple BI tools to be used for data acquisition, and speeding up the information delivery.

Agility has become a very popular methodology for many businesses because of its iterative nature and the ability to continuously improve your products, processes, and services. With data virtualization, turnaround times are significantly reduced. Mr. Kalluru says, “The speed at which you can actually use the data is faster. You don’t have to worry about copying data into your system which takes time. Data virtualization is simple. You make a call and you get the data back. It’s quick, fast, and you don’t even need all of the datasets. You just need a subset of the data you want to work with.”
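The "make a call and get back only the subset you need" idea can be sketched as follows. This is a hedged illustration, not a real data virtualization API: the `fetch_subset` helper, the `orders` table, and the in-memory SQLite database standing in for a remote source are all assumptions.

```python
import sqlite3

# Columns callers may request; anything else is rejected before building SQL.
ALLOWED_COLUMNS = {"order_id", "region", "amount"}

def fetch_subset(conn, columns, region):
    """Return only the requested columns for one region, filtered at the source."""
    unknown = set(columns) - ALLOWED_COLUMNS
    if unknown:
        raise ValueError(f"unknown columns: {sorted(unknown)}")
    sql = (f"SELECT {', '.join(columns)} FROM orders "
           "WHERE region = ? ORDER BY order_id")
    return conn.execute(sql, (region,)).fetchall()

remote = sqlite3.connect(":memory:")  # stand-in for a remote data source
remote.execute(
    "CREATE TABLE orders (order_id INTEGER, region TEXT, amount REAL, notes TEXT)"
)
remote.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)", [
    (1, "east", 100.0, "a"), (2, "west", 250.0, "b"), (3, "east", 40.0, "c"),
])

# One call returns two columns for one region; the rest never leaves the source.
east_amounts = fetch_subset(remote, ["order_id", "amount"], "east")
print(east_amounts)
```

Because the filter and the column list are applied where the data lives, the caller receives two of the four columns and two of the three rows instead of a full copy of the table.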

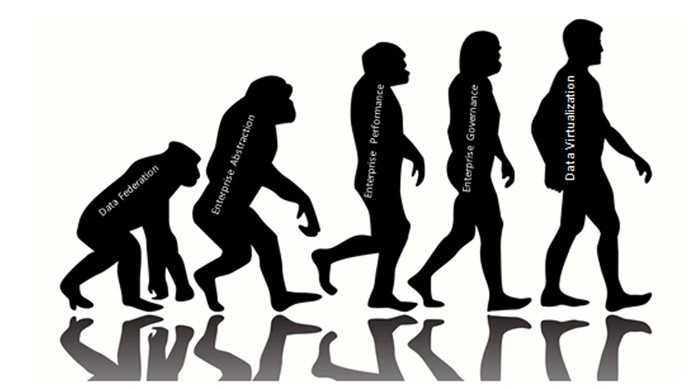

Data Virtualization – Past, Present, and Future

Past

It’s no surprise that data virtualization has significantly evolved over the years, as most technological innovations do. But how exactly has it evolved? According to Mr. Winslow, it’s the tools. “Back in the day, there were no data virtualization tools or anything, or at least that I was aware of, that did that,” he says. “The way you developed data warehouses was you had engineers write queries and the queries would get executed and then copied into a separate database to be used by the consumers. The queries could run against a local or remote database to get the data.” It was a much more technical process than it is today in the world of automation.

Present

About today’s data virtualization, Mr. Winslow says, “Tools like Loxio now offer ease of bringing in disparate data, applying different rules, and providing the data governance needed to make sure that the data is accurate.” Today’s data virtualization puts the power in the hands of the consumer, rather than having to bring in a development team to do the work.

Future

We never truly know what the future holds, but we can make predictions. For Mr. Winslow, the future of data virtualization is this: “The tools that support data virtualization now will become easier to use. The process with large data sets is slow, so there will be some innovations there to improve performance.” One thing is certain: there will always be a need for data virtualization. “It’s not even an ‘I think.’ I know there will always be a need for data virtualization. Because data is going to be stored in the different data stores, organizations have multiple systems with multiple stores and they want to combine them, so they’ll have to virtualize the data. It will always exist.”

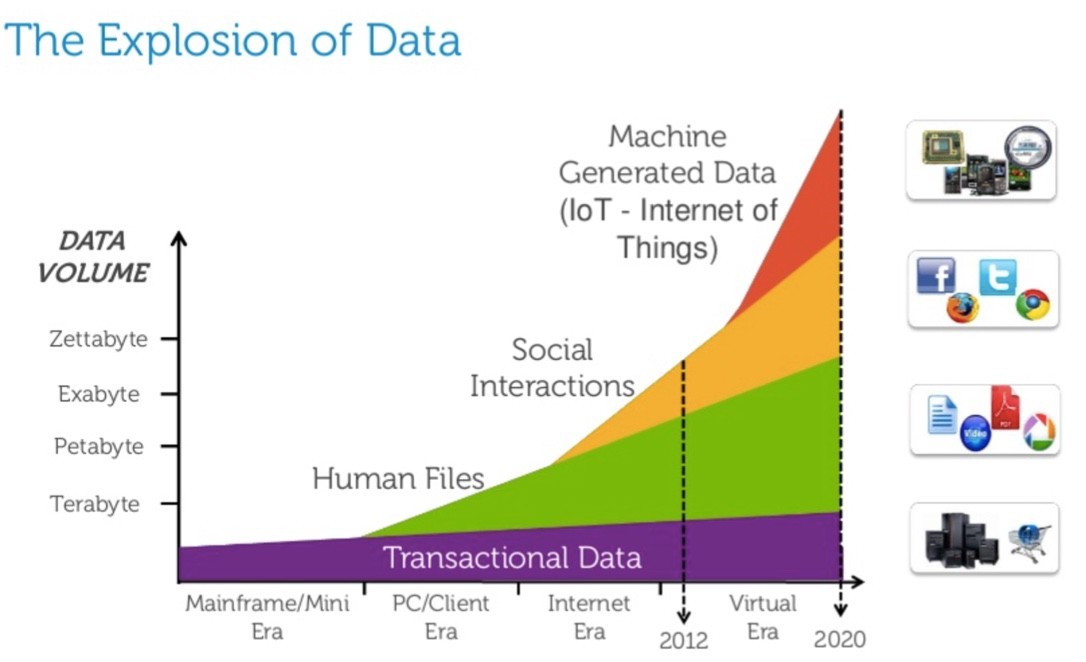

Not only will data virtualization continue to exist, but data itself will continue to grow. “Distributed datasets will continue to evolve. More and more datasets are going to come up. As the distributed data sets continue to grow, this data virtualization will be the key for us to access different data sets with ease, without replicating the data,” says Mr. Kalluru. Data is everywhere, generated all the time from different sources. “Every time you use a phone, when you click a button, data is being generated. Every time you open a garage door that has an IoT device in it, it has data points in it. As IoT [Internet of Things] and things like that continue to grow, data is going to grow,” says Mr. Kalluru. “Data is going to be the king.” As data continues to grow, data virtualization will always be right behind it to give that data meaning.

Source: Why you should study big data

Sources

AtScale. “The Rise of the Adaptive Analytics Fabric.” AtScale, www.atscale.com/wp-content/uploads/2019/10/The-Rise-of-the-Adaptive-Analytics-Fabric.pdf.

“Data Virtualization: An Overview.” YouTube, uploaded by Denodo, 26 March 2020, https://www.youtube.com/watch?v=3eWltRLA0ZY.

van der Lans, Rick F. “Data Federation.” ScienceDirect Topics, Elsevier B.V., 2012, www.sciencedirect.com/topics/computer-science/data-federation.

“Virtualization Explained.” YouTube, uploaded by IBM Cloud, 28 March 2019, https://www.youtube.com/watch?v=FZR0rG3HKIk.

“What is Data Virtualization?” YouTube, uploaded by intricity101, 03 October 2012, https://www.youtube.com/watch?v=6Ws-3dOGasE.